Generative AI: Shaping Health Care through Safe and Responsible Use

Generative AI is one facet of the AI spectrum. It learns patterns from existing data and uses that knowledge to create new content, such as stories, pictures or music. Houston Methodist continues to be one of the top health care systems leveraging generative AI. We’ve also taken a strong stance on using this technology safely and responsibly to maintain the trust of our clinicians and patients.

Understanding AI

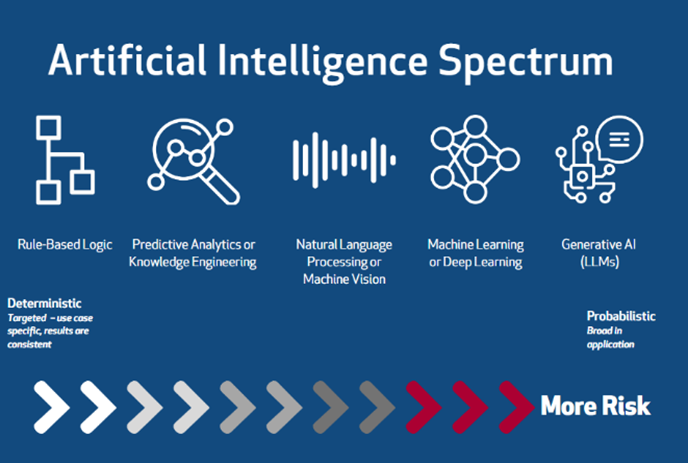

The range of AI spans from rule-based logic at one end to generative AI, such as large language models (LLMs), at the other. Between the two are other AI approaches and techniques for solving problems and completing tasks. Just as this range varies in use, it also varies in risk.

Rule-based logic has existed for decades and typically presents the least risk. It relies on predefined rules or logical conditions to provide consistent results, like the spell check feature on your phone. For example, this tool knows that when you type “theif,” you most likely mean “thief,” and it may learn new rules based on words you commonly misspell and then correct.
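To make the idea of “predefined rules” concrete, here is a minimal, hypothetical sketch in Python of how a rule-based spell checker might work. The class name, the sample rules and the three-correction threshold are illustrative assumptions, not how any particular phone keyboard is actually built. The key property it shows is that the same input always produces the same output, which is what makes rule-based logic predictable and comparatively low risk.

```python
class RuleBasedSpellCheck:
    """Hypothetical rule-based corrector built on a fixed lookup table of known misspellings."""

    def __init__(self) -> None:
        # Predefined rules (illustrative): misspelling -> correction
        self.rules: dict[str, str] = {"theif": "thief", "recieve": "receive"}
        # How many times the user has manually made each (typed, fixed) correction
        self._user_fixes: dict[tuple[str, str], int] = {}

    def correct(self, word: str) -> str:
        # Deterministic lookup: a known misspelling is replaced, anything else is returned unchanged
        return self.rules.get(word.lower(), word)

    def record_user_fix(self, typed: str, fixed: str, threshold: int = 3) -> None:
        # Assumed learning rule: after the user makes the same fix `threshold` times,
        # store it as a new predefined rule
        key = (typed.lower(), fixed)
        self._user_fixes[key] = self._user_fixes.get(key, 0) + 1
        if self._user_fixes[key] >= threshold:
            self.rules[typed.lower()] = fixed


checker = RuleBasedSpellCheck()
print(checker.correct("theif"))  # thief
print(checker.correct("teh"))    # teh (no rule yet)
for _ in range(3):
    checker.record_user_fix("teh", "the")
print(checker.correct("teh"))    # the (learned rule)
```

Unlike a generative model, nothing here is predicted or guessed: every correction can be traced back to a specific rule in the table.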

Generative AI is on the other side of the spectrum and can be riskier for various reasons. It’s more like a magical artist that sees thousands of pictures, then uses those examples to paint its own picture, or a storyteller who reads thousands of books, then uses that content to create a new story. These tools are simply attempting to predict what you want to hear. Their answers don’t have to be 100% factual, and it can be difficult to figure out how a tool generated a given answer.

Publicly available generative AI tools typically learn from their training on vast datasets, from end users’ input and from external data they access. However, this data is sometimes outdated or contains biases, which can result in inaccurate responses. That’s why it’s imperative that end users validate all responses. More secure and private versions of generative AI models can be set up that don’t retain inputs or use them for further training. This helps mitigate some privacy and data protection concerns. However, other risks remain.

Publicly Available Generative AI Risks

Tools like ChatGPT and Meta AI are generative AI tools available to the general public. These sources give anyone the ability to experiment with and create AI-generated content. While they provide remarkable creative potential, they also pose various risks.

One of the many risks is their limited data protection, meaning they lack safeguards to protect sensitive or personal information, leaving your data vulnerable to misuse or exploitation.

Another risk is that they often lack advanced privacy protections. They’re not governed by any specific privacy standards or guidelines and often lack robust security measures to protect against unauthorized access, data breaches or cyberattacks. To provide responses, they’re trained on large datasets that may not have stringent protocols in place to ensure the data is ethically sourced or anonymized, opening the door to privacy breaches. This is why sensitive information, such as Protected Health Information (PHI) and Personally Identifiable Information (PII), should never be entered into these public sites.

Mitigating Risks – from Houston to The White House

Safe and responsible use, particularly in a health care setting, is essential to maintaining patient safety, medical ethics, trust, data integrity, security and regulatory compliance. As AI technologies become increasingly integrated into our clinical practices, we must work to minimize potential risks at both the national and HM system levels. We want to harness the benefits of AI for better health care delivery while ensuring our patients’ safety, privacy and trust.

To tackle this, HM recently established an AI Oversight Committee. The committee is composed of stakeholders from Clinical Informatics, Innovation, Information Technology, Quality and Research Analytics, and Legal and Privacy. It will proactively review proposed uses of AI technology, and specific use cases for that technology, to evaluate and mitigate potential risks. Its charge is to create clear guidelines, standards and best practices for HM AI tools, with a collaborative focus on ensuring a human is always in control, prioritizing practical solutions that add value, implementing technology responsibly and building relationships with trusted partners that consistently follow our recommended best practices.

On a national level, President Biden signed an Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence on October 30, 2023. This EO demonstrates the Biden-Harris Administration’s commitment to governing the development and use of AI responsibly and safely, including in health care settings, while safeguarding patients’ privacy and security. As part of this effort, the Administration is partnering with leading health care providers and payers that have committed to the safe, secure and trustworthy purchase and use of AI in health care. HM is one of the 28 organizations participating (read this statement from The White House).

“My intent in participating in conversations about AI in health care at both national and local levels is to ensure we’re having realistic conversations about feasibility and the risks associated with the application of AI, in a setting where trust with our patients and clinicians is our primary focus,” said Dr. Jordan Dale, chief medical information officer. “We want to ensure practical, high-value applications are prioritized and promoted, while other use cases are scrutinized with proper oversight and rigor to maintain high trust.”

Dr. Dale continued, “No one thinks about the use of spell check in their everyday life, but many pause and want to ask questions when they step into a driverless car. Additionally, as a health system, we want to promote human contact and empathy where it is of most value to patient care and focus this technology where we can empower more of those human connections.”

Safely Leveraging AI for Better Patient Care

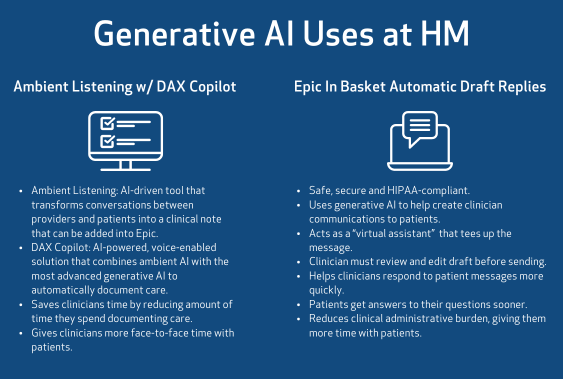

HM currently leverages some generative AI applications in clinical settings. These tools are integrated directly into specific devices or systems, where they can perform certain tasks safely and securely in a protected, controlled environment. In these settings, the tools can analyze large clinical data sets and reduce the time clinicians spend on research and analysis, giving them more time to focus on their patients. This is an example of how we can benefit from AI capabilities while safeguarding data privacy and security.

We’re currently using AI-driven platforms and chatbots to connect with patients more efficiently. We’re also using generative AI tools in other areas, such as ambient listening with DAX Copilot and automating patient message replies in Epic In Basket.

Final Thoughts

AI tools continue to lead health care into a new era of innovation, offering unprecedented opportunities for better patient care delivery and streamlined clinical workflows. As these sophisticated AI systems reshape the health care landscape, we must remain diligent in prioritizing safe and responsible use, so we can continue to provide our patients with the unparalleled safety, quality, service and innovation they’ve come to expect from HM.